3 data-modeling problems with Marketing Attribution, and 1 way to better understand marketing value

Marketing Attribution suffers from subjective scoring models, low-information feature sets, and measuring data at the wrong level. There is a better way.

The attraction of Marketing Attribution

The goal of marketing attribution is to optimize investment in the marketing mix in order to enhance marketing performance. Last year, according to eMarketer, 83% of companies applied marketing attribution efforts to their digital marketing, and more than half did so for all marketing efforts. While such broad adoption seems difficult to believe, there are a number of good reasons to consider marketing attribution:

- It is usually better to take a quantitative approach than a solely qualitative approach to systemic optimization;

- There are large volumes of certain types of marketing data on hand for most marketing organizations, so it may be possible to deal in statistically significant sample sizes;

- The outcome of a successful marketing attribution model has such strong potential that it almost seems remiss to not try it.

Defining terms: what is Marketing Attribution?

It is clearly true that there are optimizations to the marketing mix that can be achieved through observing data and drawing conclusions from the data. Imagine you were running a digital advertising campaign with some traffic on Facebook and some traffic on Google, it would not take a great deal of effort to begin to quantify which investment was driving the highest return. This type of effort falls closer to the realm of common sense than to mathematical model, and it should be done as a matter of routine.

So, I should clarify that for the purpose of this article – and in general – I don’t subscribe to the definition of Marketing Attribution that broadly encompasses all and any efforts to enhance marketing performance, which some are beginning to adopt. Rather, I am focused on the supposedly scientific approach of building attribution models of sophisticated buyer journeys, and being directed by their outputs.

My contention is that nearly all of these models are doomed to failure because they are either too channel-focused (vs. campaign-focused) or too channel-biased (e.g. they capture lots of digital data and very little offline data) to accurately model buyer behavior. Consequently, with few exceptions, they yield either generic, high-level conclusions that don’t warrant the level of effort, or such localized tactical conclusions that they are not generalizable.

This in turn raises the question of whether the levels of effort and expense currently being invested in the space are well spent.

Reasons to be skeptical. 1, 2, 3…

My background is in both big and small data analysis. For more than 25 years, before joining Planful, I worked in speech and language AI. My initial skepticism about Marketing Attribution has hardened into a rejection of the premise that a causal relationship can be established between touchpoint data – the lifeblood of most models – and buyer behavior. The paucity of success stories at least anecdotally supports skepticism. Published case studies are normally narrow, and unconvincing. And while I’m certainly not the first to hold this view (c.f. this excellent article by Sergio Maldonado), the flourishing Marketing Attribution market indicates that I remain in the minority.

There are many advocates of Marketing Attribution who acknowledge it’s not an exact science. But my concern is that it may not be a science at all: that it can’t yield reliable, generalizable insights because there are too many external variables to control for and too much bias in the process, leading to inherently broken models. In a controlled environment, with perfect insight into each customer’s journey and exposure to marketing messages, it seems plausible that reliable models could be created.

But that is not the environment we live in, in fact with evolving privacy regulation and exploding channel complexity, that environment is moving further and further away from us. I have chosen to focus on 3 of the major problems that I perceive from a data modeling perspective: Causality, Bias and Feature Selection. There are others that might warrant discussion in a later post.

Finally, I share my view on simpler, more effective methods marketers should consider in order to measure and improve their marketing investments to achieve better outcomes. I’d love to hear your feedback.

Challenge 1: causality

Source:https://xkcd.com/552

Correlation does not imply causation. This is a well-worn truth, but the instinct to assign meaning is so strong that we all struggle to overcome it sometimes. Our ability to spot patterns and infer insight from them is human nature. It’s an evolutionary advantage (e.g. if you see a couple of people die after being bitten by a snake, you tend to avoid all snakes) but it can lead to some big mistakes.

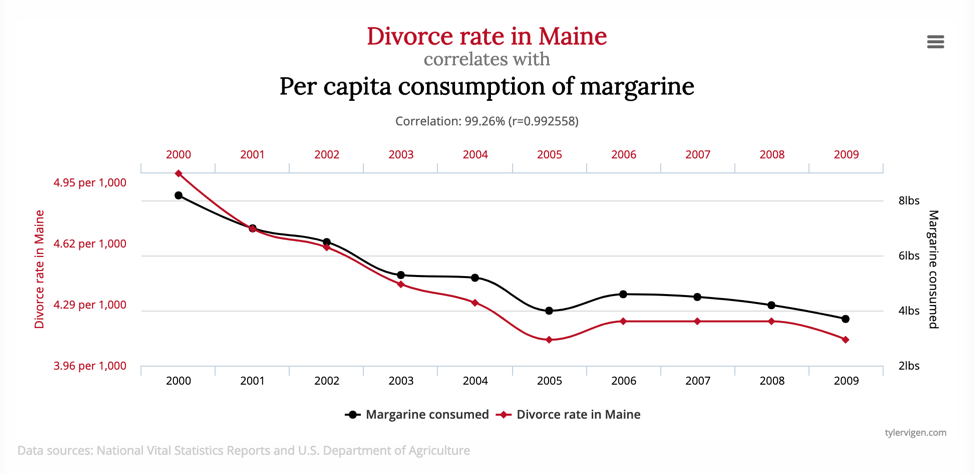

One interesting example I found on Stack Overflow: there is a correlation that shows that the more firefighters that are sent to extinguish a fire, the more damage the fire does. Common sense tells us that this is because you need more firefighters to put out large, damaging fires. It would be disastrous to conclude that you’d see less fire damage if you sent fewer firefighters (or for that matter, none) But when cause and effect are less obvious to us, or even hidden from us, it can become extremely challenging to know where the true cause of variance lies, or whether we are dealing with a spurious correlation of the type that Tyler Vigen illustrates so well.

Marketing attribution operates on the basis that correlation implies causation. This issue is exacerbated by the 2ndfundamental flaw in Marketing Attribution: Bias

Challenge 2: bias

Attribution bias has been defined asa cognitive bias that refers to the systematic errors made when people evaluate or try to find reasons for their own and others’ behaviors.

Significance Bias

The first bias is that the data will yield actionable results. I haven’t seen a marketing attribution report yet that concludes that the results were not useful. Fans of the scientific method love inconclusive results. If all results are interpreted to be meaningful, then we have a bias towards significance that is almost certain to be problematic over time.

Subjectivity Bias

The second bias is in the model construction and scoring, which is heavily subjective. Marketers make subjective decisions about

- Which attribution model to use

- Which touches to ascribe proportional value to

- What value to assign

- What weights to apply to certain scores and channels

These decisions are not taken naively, but the level of bias in the process is compounded and potentially fatal to the accuracy of the model. Imagine you worked at a company with a large marketing team of top performers. If you took 10 of them and tasked them to create attribution scoring models for the same customer journey, how similar do you think the scoring and weighting models would be? Since each individual’s experiences would inevitably drive them to different interpretations – not very.

Add to the mix that those individuals have a vested interest in the outcome. The digital demand gen lead is going to have conviction that digital contributes a large proportion of the value. But the PR lead will know it’s the media coverage that really does the trick. The product marketer knows that none of that is valuable without their collateral. For any attribution model, there is an unknowable bias distortion baked in. The results of a subjectively constructed model therefore have to be viewed as uncertain. While some might start to yield valuable results with iteration, it’s impossible to know a priori whether you have a workable starting point or not.

The approach of building expert-based scoring models and then bootstrapping data-driven models from their outputs is actually a pretty good one. But it requires:

- (1) an objective truth

- (2) an understanding that any unmeasured data can’t completely change the outcome (such as confounding variables).

Marketing attribution does not have an objective truth or ideal, and it can’t capture all the variables that contribute to buyer behavior. There is little evidence that the variables it does capture are reliably predictive.

Confirmation Bias

The third kind of bias we need to consider is confirmation bias. The human tendency to minimize data that we disagree with and emphasize data that supports our preconceptions is very well documented.

Confirmation bias is usually overcome by seeking disconfirming evidence – that is, evidence that challenges the bias. Assuming we can overcome people’s natural disinclination to address confirmation bias, what types of disconfirming evidence might we consider for Marketing Attribution? To answer that, we will look at my 3rdconcern.

Challenge 3: feature selection (& tuning)

Feature selection is a well-known term in statistical modeling, including machine learning. Interestingly, it is sometimes called attribute selection. The intent is to identify the most information-rich features from a set. Imagine two models that yield similar results. One of them is trained on 1,000 features and one is trained on 10. It is likely that the 1,000 variable model has not gone through a good feature selection process, meaning it has many features that aren’t contributing much to the results. It, therefore, consumes more data and processing power than the simpler model, as well as somewhat obfuscating the most descriptive variables.

Now imagine there are two 10-feature models, and one performs 60% better than the other. That would imply that the worse model has either gone through a bad feature selection process, or that there is data available to the better model that is simply not available to the other.

Earlier in my career, when we were training speech recognition engines or language models, we gathered large volumes of data, and trained algorithms to extract features that explained the data and that we could turn into predictive models to recognize speech we had not encountered before. You could tell if you had a good model by measuring how well it recognized speech it hadn’t been trained on. If our model performed poorly, it might be because we were training on the wrong set of features, or the weighting of those features was wrong. Or it might be that we didn’t yet have sufficient data to represent the language.

The way we could tell how we were doing is by using a consistent set of test data to measure against. The test data was valuable because it gave us an objective ground truth. We knew that if we could get good results on the test data, we were likely to get good results on previously unseen data.

Marketing attribution has a drastic feature selection and tuning challenge. There is a lot of data that it does not – often cannot – include, such as:

- Ad fraud – ad injections, hidden impressions, bots, ad stacking, fraudulent clicks, and the various other bad practices in digital channels create a highly noisy signal for marketers to analyze

- Quality of Content – in Made to Stick, Chip and Dan Heath explore why some stories and ads are effective and some aren’t. They use a great example of how Subway’s TV ads were relatively ineffective when they emphasized the nutritional content of their sandwiches vs other fast food types, but became hugely successful when they told the emotional story of a customer who had lost significant weight by eating Subway sandwiches. The channel and frequency of touchpoints were the same, but the content wildly changed the results. With ad networks auto-optimizing creative, the huge variety of content required at any moment, and the need for creative to be refreshed so frequently, it’s an almost impossible task for marketers to account adequately for the impact of content on channel performance and take action on it.

- Buyer mindset – a repeat-buyer, a first-time buyer, a buyer from a friend’s recommendation, a buyer from a classic marketing path from awareness to purchase all represent highly different buyer mindsets. While it is likely that those buyers may have different customer journeys to the purchase point some of the time, it’s also highly likely their journeys will overlap in ways that are indistinguishable to the model.

- Hidden touchpoints – Let’s use digital as the base case for marketing attribution. Non-digital channels such as radio, billboard, print media, direct mail, word of mouth, TV, and events are opaque to the model. While these touchpoints still represent more than half of all B2B marketing program spend, they can’t be accurately modeled. It is unknowable what an online customer has seen prior to or during an online customer journey. So, if you want to give the penultimate touchpoint 40% of a lead value in your customer journey, how do you even know what the penultimate touchpoint was? And what does it mean for all the times you gave the wrong touchpoint that value because it happened to be only the digital ones that you could see?

- Customer tracking – the regulatory landscape has lurched in favor of privacy through initiatives such as GDPR. Commercial platforms are beginning to self-regulate. Consumer expectations of privacy are increasing continuously. The viability of accurately tracking customers between channels and platforms is decreasing rather than increasing over time. And this is going to accelerate.

- The talent and experience of the individuals executing the campaign – if this didn’t make a difference, we wouldn’t bring in experts and agencies to help with our marketing campaigns and events.

I think it is intuitively obvious that the data points above would have more impact on marketing outcomes than the sequence of ads a consumer sees across a partial customer journey. At what point do we step away from the model that we’ve constructed from the available breadcrumbs and consider a different approach.

When you look at what is entered into marketing attribution models vs the perfect-world scenario, it is clear that only a thin feature set can be modeled. Tuning a bad model with poor features will probably not yield a good model.

Finally, marketing attribution models don’t have an objective truth. What is a good outcome, objectively? Since marketers don’t know what the optimum marketing mix is (or they wouldn’t need attribution models at all) they don’t have a gold standard to reach for. We don’t even know if models operate at 30% of optimum, or 10%, or 60%. The vast majority of marketers don’t know how they stack up to industry peers (although they would really like to).

For the most part, then, we’re dealing with inherently biased, over-simplified models that are missing important training features, and we don’t have an empirically testable quality level. Why would we make decisions about the largest discretionary spend in our company based on the output of such inherently – almost necessarily – flawed models? It’s like trying to predict a class’s academic performance based on attendance and textbook choice. You can probably glean a little bit of insight, but most of the useful predictive data is missing, so don’t build your education strategy around it.

A Different Approach to Marketing Attribution

At Planful, we think the fundamental questions that marketing attribution asks are not the best ones. What a marketer should care about first is whether their campaigns are delivering business value, indeed whether the entire marketing plan is delivering business value.

What we have discovered is that a number of companies are measuring the wrong things and measuring them hard. Some challenges are cultural. For example, it can be difficult for marketers to map their plan of campaigns and key marketing metrics into the corporate vernacular of finance and sales (GL codes and revenue). Some marketers default to what they can measure, such as vanity metrics that are accessible but low information.

Our Approach: Return on Campaign and Return on Marketing Plan

Our view is that it is better to use the following approach:

- Start with an overall assessment of your top-level goals for the entire marketing plan.

- Define objective metrics for those goals.

- Assess whether the top-level metrics represent a compelling return to the business based on your entire budget.

- Conduct transparent reviews with the CEO and the CFO. If you align on high-level target outcomes at the beginning of the budget period, then you have a common view of success.

- Define thematic, integrated campaigns that directly support the metrics of their key goals. If your largest campaigns don’t directly drive the metrics of your major goals, re-plan and re-align.

- Finally, track the metric achievement and cost consumption of those key campaigns that will achieve your goals. That is what defines your success. You will measure not only outcomes but the cost per outcome. This is why it is so important to manage your budget and plan together. You can then measure the relative performance of your campaigns rather than your channels, and you can determine which types of campaigns should be repeated.

Very few marketing organizations can reliably and holistically demonstrate the relative performance of their campaigns and the overall performance of their marketing plan. Until they can, mix optimization through marketing attribution is a distant secondary factor, and the wrong measurement.

We recommend defining your ROMP (Return on Marketing Plan) in terms of a small number of strategic goals with objectively measurable metrics that really deliver business value. Then, measure relative campaign performance toward those goals, and over time optimize your investments towards the highest performing campaigns.

Latest Posts

Blogs

Interviews, tips, guides, industry best practices, and news.